Uber's employment practices are back in the news, for all the wrong reasons. A court in Amsterdam, where the ride-sharing company has its European headquarters, has ruled that six drivers fired by AI should be given their jobs back. The Guardian reports that the six drivers, five of whom are British, had their contracts terminated by Uber's AI HR technology, which wrongly accused them of fraudulent activity.

Uber has been ordered to pay over €100,471 in damages, plus a financial penalty for each day it delays reinstating the drivers affected by the automated dismissals.

Commenting on the judgment, David Sant, a commercial solicitor specialising in data and technology at Harper James, said: 'This case is a high-profile example of a growing issue where employment law, workers’ rights and data protection overlap. The Uber ruling raises an important question: to what extent can companies rely on automated processing of data to make decisions that affect employees and workers?

The same issues arise when automated decision-making is used to terminate contracts as when algorithms are used in recruitment. Employers and hirers need to know that the UK’s data protection laws (including what is now known as the UK GDPR) restrict their ability to make "solely automated decisions". Under the UK GDPR, it is unlikely that you could ever lawfully make a solely automated decision to end somebody’s employment or their contract as a worker.

Employers and hirers can use automated processes to make internal recommendations for human review, as long as a human is meaningfully involved at each stage of the decision-making process. In other words, the focus should be on AI-assisted decision-making, rather than AI-only decision-making. Employers and hirers must comply with the data protection principles under the UK GDPR, including that there must be a lawful basis for the processing and that they must always be transparent about any data processing.'

Businesses in the UK first started using facial expression technology alongside artificial intelligence to identify the best candidates in job interviews around December 2019. Applicants are filmed by phone or laptop while they answer a set of job-related questions, and the AI technology then analyses their responses in terms of language, tone and facial expressions.

Use of this technology has rocketed since then, particularly during the pandemic, which has limited businesses’ ability to interview applicants face to face.

US giant HireVue, one of the leading providers of AI recruitment software, conducted 12 million interviews using entirely automated processes in 2019. Last year the figure rose to 19 million, and experts predict it will keep rising.

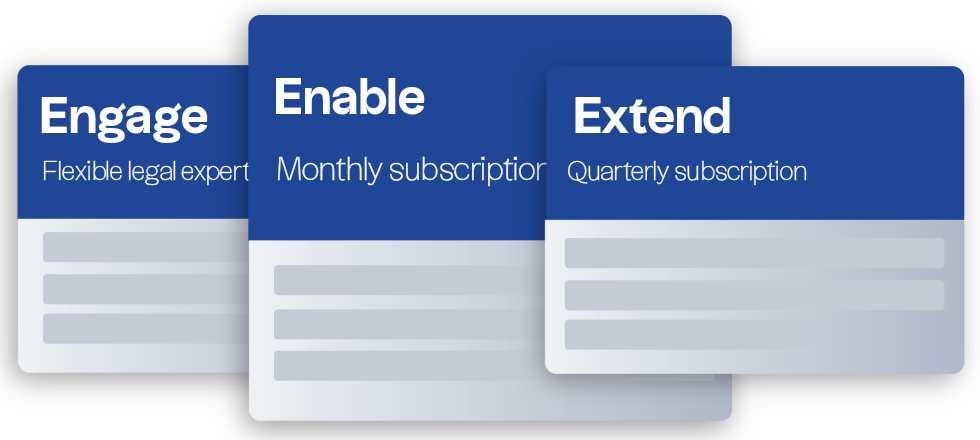

Although the development of AI brings opportunities, it also presents risks, not least when the computer says no to a candidate’s application. Against this backdrop, Harper James Solicitors, the law firm for entrepreneurs, is releasing new advice for any company considering a switch to AI-based recruitment and employment models.

Data protection and technology expert David Sant has prepared eight FAQs to help employers looking to use AI technology in their talent acquisition strategy. David says: 'More and more businesses are now looking to introduce AI-based models to their recruitment and general employment processes. But there are important steps anyone considering making this switch needs to keep in mind at all stages. We can advise employers and HR directors on the checks and balances they need to include in AI-assisted recruitment.'

The eight FAQs are:

- What laws are relevant to using AI in recruitment and in the workplace?

- What are the main data protection obligations, risks and rights that employers need to be aware of?

- What does this mean if I want to use software to help in a recruitment process?

- Can I use software to make automatic decisions in the recruitment process, eg to filter out some applicants?

- Can I just get job applicants to consent to solely automated decision-making?

- And what about using software/AI to monitor employee performance?

- What about the risk of bias?

- Can applicants and employees complain about decisions based on software algorithms?

What laws are relevant to using AI in recruitment and in the workplace?

Employment laws and equality laws will of course continue to be fundamental to recruitment and employment practices. And data protection laws have always applied to the recruitment process and employment. But the increasing use of monitoring and evaluation software and even AI means that companies need to have a clear understanding of their data protection obligations, the risks, and the rights of job applicants and employees.

What are the main data protection obligations, risks and rights that employers need to be aware of?

Companies should understand that collecting and reviewing data about job applicants and employees counts as data processing under the GDPR, which means that all of the standard GDPR data protection principles apply. For example, you will need to establish a lawful basis for processing the data, be transparent with individuals about the processing, collect only the information you need for the purpose, keep the data confidential and secure, and retain it for no longer than is necessary for that purpose. Employers should also be aware that individuals’ rights (eg to request copies of the data you hold about them) apply to job applicants and employees just as they do to customers. You should also familiarise yourself with the specific rules on automated decision-making (see below).

What does this mean if I want to use software to help in a recruitment process?

Employers will have to make sure the software only collects and processes data that’s relevant to the recruitment process, and must comply with all of the other data protection principles. You will have to explain to job applicants what data you are collecting, the purposes of the processing, any third parties involved and other transparency information, for example in a privacy policy. In addition, using sophisticated AI software means that employers will very likely need to carry out a Data Protection Impact Assessment ('DPIA'). This is because the ICO has identified that AI in recruitment/employment situations is likely to result in a 'high risk to individual rights and freedoms'. A DPIA is a tool to identify and mitigate data protection risks at the outset of a project.

Can I use software to make automatic decisions in the recruitment process, eg to filter out some applicants?

The ICO’s guidance is that unless a human is involved in the decision for every applicant, this is unlikely to be lawful. For example, if an algorithm decides to filter out applicants and there is no human review, this is a 'solely automated decision'. Under the GDPR, such decisions are allowed only under certain exemptions. One of those exemptions is where the individual has given explicit consent, but this is unlikely to be appropriate in a recruitment scenario (see below). So employers should always ensure that a human is involved at each stage of the decision-making process. In other words, you should consider AI-assisted decision-making, rather than AI-only decision-making.
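For teams building screening tools, the difference between AI-assisted and AI-only decisions can be illustrated with a minimal Python sketch. It assumes a hypothetical screening model that outputs a score per applicant; the names `recommend`, `decide`, `Recommendation` and `Decision` are illustrative only and are not taken from any real product or from the ICO's guidance. The software produces an advisory suggestion, and no outcome is recorded unless a named human reviewer supplies it. Code alone cannot make human involvement meaningful: the reviewer still has to genuinely consider each case and have the authority to depart from the suggestion.

```python
from dataclasses import dataclass
from typing import Optional

VALID_OUTCOMES = ("progress", "reject")


@dataclass
class Recommendation:
    applicant_id: str
    score: float     # output of a hypothetical screening model
    suggested: str   # advisory only: "progress" or "reject"


@dataclass
class Decision:
    applicant_id: str
    outcome: str           # the outcome chosen by the human reviewer
    reviewer: str          # the person who made, and is accountable for, the decision
    model_suggestion: str  # kept for the audit trail
    overridden: bool       # True if the reviewer departed from the suggestion


def recommend(applicant_id: str, score: float, threshold: float = 0.5) -> Recommendation:
    """Turn a model score into a suggestion; nothing is decided at this step."""
    suggested = "progress" if score >= threshold else "reject"
    return Recommendation(applicant_id, score, suggested)


def decide(rec: Recommendation, reviewer: Optional[str], outcome: Optional[str]) -> Decision:
    """Record a decision only when a named human reviewer supplies the outcome."""
    if not reviewer or outcome not in VALID_OUTCOMES:
        raise ValueError(f"{rec.applicant_id}: a human reviewer must make the decision")
    return Decision(rec.applicant_id, outcome, reviewer, rec.suggested, outcome != rec.suggested)


# Usage: the model suggests rejection, but the reviewer decides to progress the applicant.
rec = recommend("A-001", 0.32)
decision = decide(rec, reviewer="hiring.manager", outcome="progress")
```

Recording whether the reviewer overrode the suggestion creates an audit trail, which may help show that the human review is more than a rubber stamp.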

Can I just get job applicants to consent to solely automated decision-making?

No, not in most cases. This is because consent is only valid if it is freely given. And in the recruitment relationship (and also in an employer/employee relationship), the power imbalance between the parties makes it very hard to show that the consent was freely given.

And what about using software/AI to monitor employee performance?

All of the same issues apply as for recruitment. The data protection principles apply: as an employer, you must establish a lawful basis for the processing, give employees transparency information and carry out a Data Protection Impact Assessment. Again, it is unlikely that an employer will be able to rely on consent as the lawful basis for the processing. And although you have a contract with each employee, you are unlikely to be able to justify monitoring software as necessary for the performance of that contract if there is a less intrusive way of monitoring employee performance. The DPIA process should help employers to address data protection risks and reach the appropriate solution.

What about the risk of bias?

There has been a lot of press coverage of biased algorithms, particularly on the basis of gender or race. For example, Amazon withdrew the use of AI in its recruitment process when it was found to favour men, and Twitter came under fire for an image-cropping algorithm that 'preferred' white faces. The risk of bias is real, and it often arises because the algorithm has been trained on a set of past human decisions that were themselves biased, so the algorithm replicates that human bias. Biased decisions in recruitment or employment would breach employment law, equality law and also data protection law (which requires fair processing, as well as technical and organisational measures to prevent discrimination).

Can applicants and employees complain about decisions based on software algorithms?

Individuals can always request transparency information, including what data has been collected and processed and for what purposes. In the case of solely automated decision-making, individuals must be told that their data is being used for solely automated decision-making, and they have a right to meaningful information about the logic involved, as well as the significance and envisaged consequences of the decision for them. They also have the right to request a review of the decision by someone who has the authority to change it.